About us

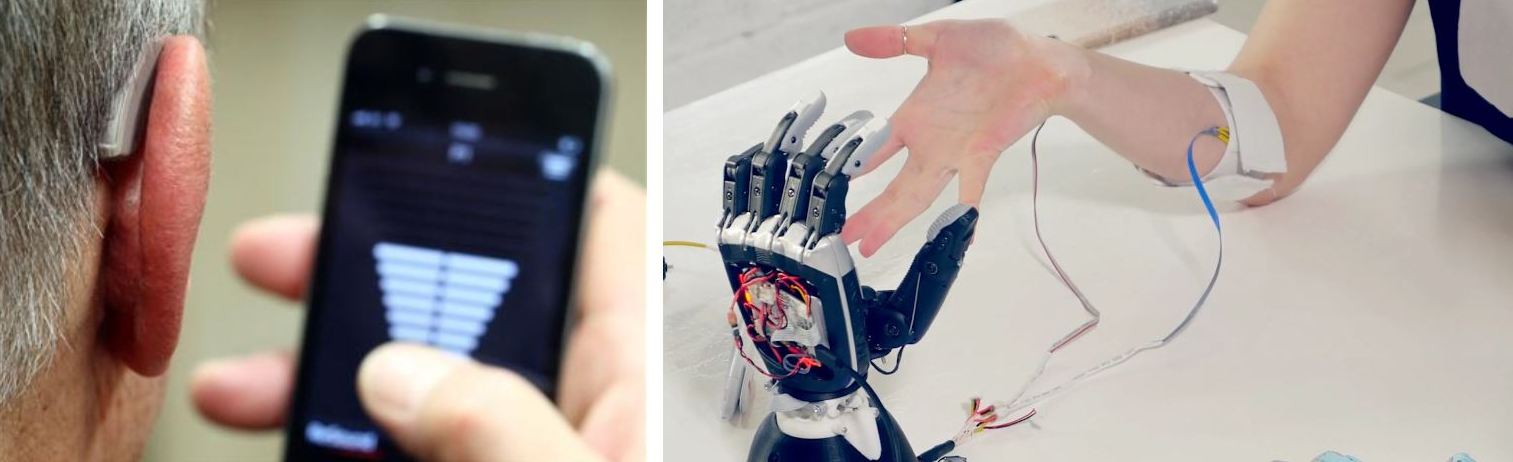

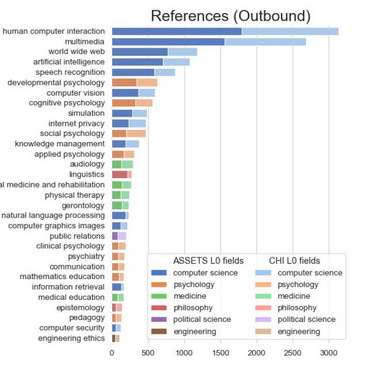

We are a research lab within the University of Michigan's Computer Science and Engineering department. Our mission is to deliver rich, meaningful, and interactive sonic experiences for everyone through research in human-computer interaction, audio AI, accessible computing, and sound UX. We have two research focuses: (1) sound accessibility, which includes designing computing systems and interfaces to deliver sound information accessibly and seamlessly to end-users, and (2) hearing health, which includes developing hardware, algorithms, and apps for next-generation earphones and hearing aids. Some key research questions include:

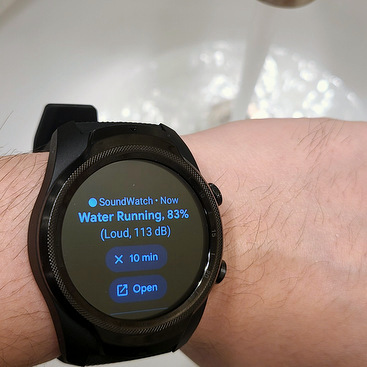

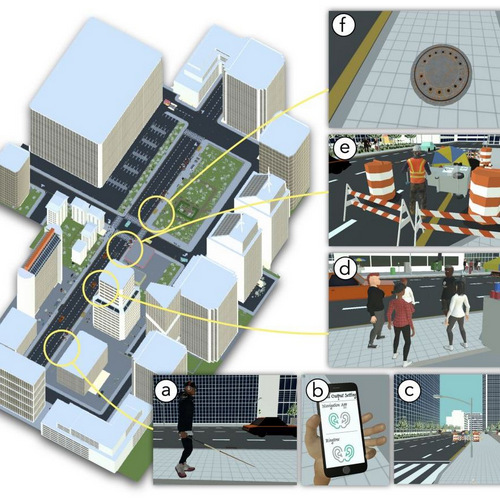

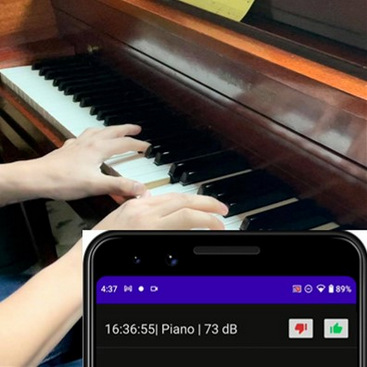

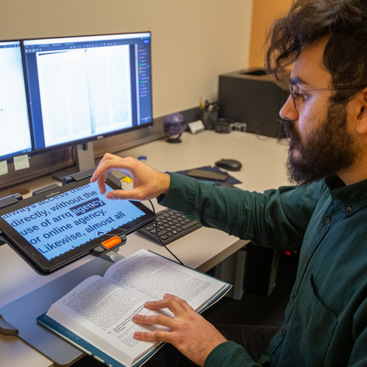

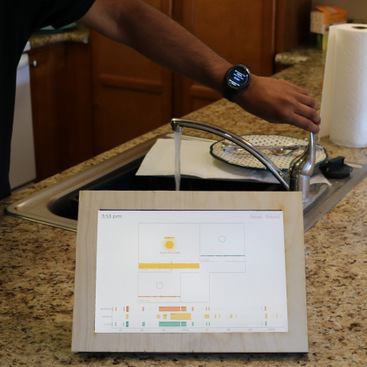

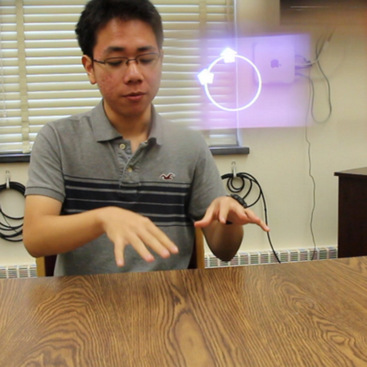

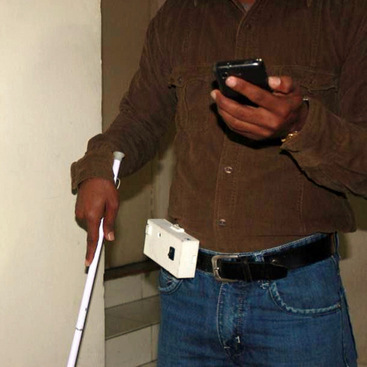

Intent-Driven Sound Awareness Systems. How can sound awareness technologies model user intent and deliver context-aware sound feedback?

Projects: AdaptiveSound | ProtoSound | HACSound | SoundWeaver

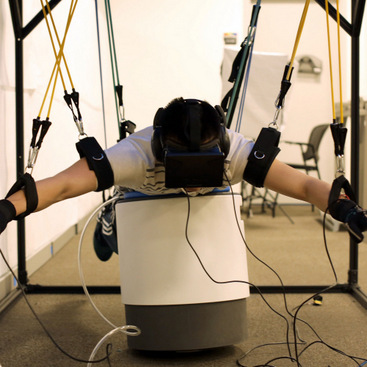

Media Sound Accessibility. How can generative AI improve the accessibility of sound in mainstream and emerging media?

Projects: SoundVR | SoundModVR | SoundShift

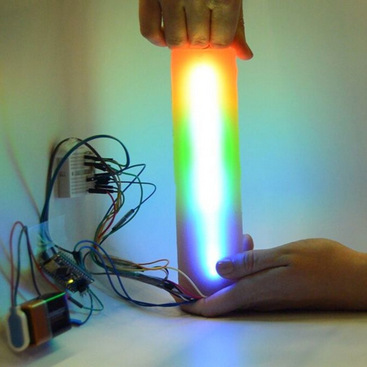

Next-Generation Hearing Aids & Earphones. How can future earphones extract desired sounds, suppress unwanted noise, and provide a seamless hearing experience? How can we dynamically capture audiometric data, model auditory perception, and diagnose hearing-related conditions on the edge?

Projects: MaskSound | SonicMold | SoundShift

We embrace the term ‘accessibility’ in its broadest sense—not only creating tailored experiences for people with disabilities but also ensuring seamless and effortless information delivery for all users. We prioritize accessibility because it provides a glimpse into the future—people with disabilities have historically been early adopters of transformative technologies such as telephones, headphones, email, messaging, and smart speakers.

Our lab is primarily composed of HCI and AI experts. We collaborate with a diverse range of professionals, including healthcare practitioners, neuroscientists, audio and biomedical engineers, psychologists, Deaf and disability studies experts, statisticians, musicians, designers, and sound artists. By adopting a multistakeholder perspective, we work closely with community experts, end users, and organizations to tackle complex challenges, an approach that has consistently delivered impactful, real-world solutions. Many of our innovations have been publicly released (e.g., one deployed app has over 100,000 users) and have directly influenced products at companies such as Microsoft, Google, and Apple. Our research has also earned multiple paper awards at premier HCI venues, been featured in leading media outlets (e.g., CNN, Forbes, New Scientist), and been included in academic curricula worldwide.

Our current impact areas span media accessibility (e.g., enhanced captioning for movies, developer toolkits for VR accessibility) and healthcare accessibility (e.g., technologies that support communication within mixed-ability physician teams, as well as models and algorithms for next-generation hearing aids). Building on this foundation, we envision a future where technology not only overcomes existing accessibility barriers but also expands human hearing itself—enabling highly personalized, seamless, and fully accessible soundscapes that dynamically adapt to users' intent, environment, and social context. We call this vision 'Auditory Superintelligence'.

If our vision and research areas resonate with you, please apply. We are actively recruiting students and postdocs to help shape the future of sound accessibility!

Recent News

Feb 19: Our initial work on enhancing communication in high-noise operating rooms has been accepted as a poster at Collaborating Across Borders IX!

Jan 16: Our proposal on dizziness diagnosis was approved for funding from the William Demant Foundation! See news article.

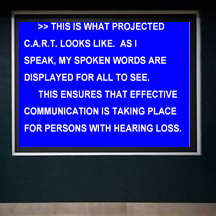

Oct 30: Our CARTGPT work received the best poster award at ASSETS!

Oct 11: Soundability lab students are presenting 7 papers, demos, and posters at the upcoming UIST and ASSETS 2024 conferences!

Sep 30: We were awarded the Google Academic Research Award for Leo and Jeremy's project!

Jul 28: Two demos and one poster accepted to ASSETS/UIST 2024!

Jul 02: Two papers, SoundModVR and MaskSound, accepted to ASSETS 2024!

May 22: Our paper SoundShift, which conceptualizes mixed reality audio manipulations, accepted to DIS 2024! Congrats, Rue-Chei and team!

Mar 11: Our undergraduate student, Hriday Chhabria, accepted to the CMU REU program! Hope you have a great time this summer, Hriday.

Feb 21: Our undergraduate student, Wren Wood, accepted to the PhD program at Clemson University! Congrats, Wren!

Jan 23: Our Master's student, Jeremy Huang, has been accepted to the UMich CSE PhD program. That's two pieces of good news for Jeremy this month (the CHI paper being the first). Congrats, Jeremy!

Jan 19: Our paper detailing our brand new human-AI collaborative approach for sound recognition has been accepted to CHI 2024! We can't wait to present our work in Hawaii later this year!

Oct 24: SoundWatch received a best student paper nomination at ASSETS 2023! Congrats, Jeremy and team!

Aug 17: New funding alert! Our NIH funding proposal on "Developing Patient Education Materials to Address the Needs of Patients with Sensory Disabilities" has been accepted!

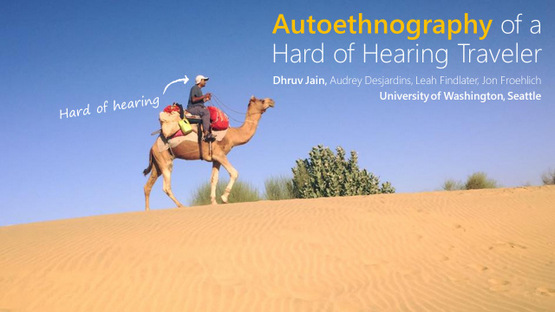

Mar 16: Professor Dhruv Jain elected as the inaugural ACM SIGCHI VP for Accessibility!